Introduction

In version 1.3 of RealBridge we added double-dummy analysis. This isn’t anything new, and we used the standard piece of open-source software – but we did it in a “non-standard” way, and the details might be useful to others.

In particular, this post explains how to run a specific bit of open-source software, the DDS library (written in C++), inside a web browser. It will be most relevant to anyone developing web-based bridge software, but it should also interest web developers more generally who want to deploy software written in non-web-native languages such as C or C++ on the client side.

The Double-Dummy Solver library (DDS)

The DDS library, written by Bo Haglund and Soren Hein (with contributions from others), is a well-optimised open-source double-dummy solver, the purpose of which is to evaluate how good different choices of play are during a bridge deal.

A bridge deal has four hands of thirteen cards each, and the four players play as two teams of two, or partnerships. During the play of a deal, one of the players becomes dummy and their hand is placed face-up on the table. The three remaining players can see twenty-six of the cards (their own hand, plus the dummy), and the other twenty-six are hidden.

This partial information, or uncertainty, is what makes the game interesting – but it also makes analysing the play extremely challenging. A simpler problem, and one that has the advantage of a definite answer, is working out what the best play would be if you knew the complete layout of all the cards in advance. This is what “double-dummy” refers to: it’s simply an obscure way of saying “everyone knows the whole hand”.

Under that condition, there’s a single double-dummy result, which is what happens if everyone makes the best choice possible at every turn.

Given a deal, a trump suit and a declarer, the DDS library can efficiently compute the double-dummy result. Moreover, given the current state of play at any point during a deal – and assuming perfect play thereafter – it can tell you the eventual result of each of the legal cards you could play. That is, it can tell you how good each of the choices is at any point.
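To make the idea of a double-dummy result concrete, here is a toy solver: plain exhaustive minimax over a tiny notrump ending with every hand visible, where North-South maximise and East-West minimise the number of tricks North-South take. It is only an illustration under simplifying assumptions (notrump only, equal-length hands, a card representation of our own choosing). DDS itself adds heavy pruning and caching, and nothing here reflects its API.

```javascript
// Toy double-dummy solver: exhaustive minimax over a small notrump ending.
// Players: 0 = North, 1 = East, 2 = South, 3 = West (North-South are 0 and 2).
// A card is { suit, rank } with rank 2..14 (14 = ace); all hands are visible.

// Cards the player may play: must follow the led suit if able.
function legalCards(hand, ledSuit) {
  const following = hand.filter((c) => c.suit === ledSuit);
  return following.length > 0 ? following : hand;
}

// Tricks North-South take from here if all four players play perfectly.
// `leader` led the current trick; `trick` holds the cards played to it so far.
function ddTricksNS(hands, leader, trick = []) {
  if (trick.length === 4) {
    // Notrump: the highest card of the led suit wins the trick.
    const ledSuit = trick[0].card.suit;
    let winner = trick[0];
    for (const t of trick) {
      if (t.card.suit === ledSuit && t.card.rank > winner.card.rank) winner = t;
    }
    const nsPoint = winner.player % 2 === 0 ? 1 : 0;
    return nsPoint + ddTricksNS(hands, winner.player, []);
  }
  const player = (leader + trick.length) % 4;
  if (hands[player].length === 0) return 0; // every card has been played
  const ledSuit = trick.length > 0 ? trick[0].card.suit : null;
  const maximise = player % 2 === 0; // North-South maximise, East-West minimise
  let best = maximise ? -Infinity : Infinity;
  for (const card of legalCards(hands[player], ledSuit)) {
    // Remove the chosen card from this player's hand (other hands unchanged).
    const next = hands.map((h, i) => (i === player ? h.filter((c) => c !== card) : h));
    const value = ddTricksNS(next, leader, [...trick, { player, card }]);
    best = maximise ? Math.max(best, value) : Math.min(best, value);
  }
  return best;
}

// Example: a two-card spade ending with West holding the king.
const sp = (rank) => ({ suit: "S", rank });
const ending = [
  [sp(14), sp(12)], // North: A Q
  [sp(6), sp(5)],   // East: 6 5
  [sp(3), sp(2)],   // South: 3 2
  [sp(13), sp(4)],  // West: K 4
];
console.log(ddTricksNS(ending, 2)); // South on lead: 2
console.log(ddTricksNS(ending, 0)); // North on lead: 1
```

With South on lead, North sees West's card before choosing between the ace and queen, so the finesse works. With North on lead, West plays after North's honours and always takes the queen with the king. The answer depends on the exact position, and that is the kind of result DDS computes at full scale.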

Play recaps with double-dummy analysis

When reviewing what happened during a game of bridge, players like to be able to check the double-dummy analysis – it can help identify errors, which can help you learn to avoid them in future. Now, not every double-dummy error is actually a mistake – you can make the correct with-the-odds play and lose to an inferior play that happens to work this time. This is part of the fun of the game! With enough luck, anything is possible…

Nevertheless, double-dummy analysis is a useful tool to have in your bag, and it’s much easier to use software that can compute it in a fraction of a second than to try to work it out by hand.

DDS as a web service

Existing bridge sites offering double-dummy analysis usually wrap up DDS, or other software with equivalent functionality, as a web service. That is, the client makes a request to a server, the processing happens on the remote server, and then the server sends the results back to the client to display to the user.

This used to be the only way to expose the capabilities of library software to browsers, but it comes with some drawbacks. The main one is the latency of response: given that a DDS solve of a full hand takes around 50 milliseconds, the round-trip latency to the server and back is likely to be a significant fraction of the total time. If the client and server are far apart – for instance, on different continents – the communication latency will far exceed the processing time.
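To make the latency point concrete, here is the arithmetic with an assumed round-trip time of 150 ms (an illustrative figure, not a measurement) and the roughly 50 ms solve time quoted above:

```latex
t_{\text{total}} = t_{\text{RTT}} + t_{\text{solve}} = 150\,\text{ms} + 50\,\text{ms} = 200\,\text{ms},
\qquad
\frac{t_{\text{RTT}}}{t_{\text{total}}} = \frac{150}{200} = 75\%
```

Under that assumption, three quarters of the user's wait is network time, none of which a solver running locally would pay.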

Another disadvantage is that the service provider has to provide a server! There is a potential scaling problem inherent in this – it’s likely that double-dummy queries will be an irregular workload (eg, when lots of players finish sessions at the same time, and then all look through the deals).

There’s another more subtle point about scaling and performance. The DDS solver has some built-in caching of deal-positions and results. When it explores the full “game-tree” of possibilities for a deal, equivalent positions will come up multiple times, and it’s much faster to be able to look up results we’ve already computed than recompute the same thing multiple times.

Now, the library is clever enough to reuse this information across separate queries. If an incoming query concerns the same deal, with the same trump suit, the solver can simply reuse results already in its cache. So when several consecutive queries are about the same contract and line of play, the later queries return near-instantly, with little left to compute.
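The reuse policy described above can be modelled in a few lines. This is a deliberately simplified sketch, not DDS's actual transposition table. Cached results survive only while successive queries share the same deal and trump suit. The class name, the string keys and the `solve` callback are all hypothetical.

```javascript
// Simplified model of cross-query reuse (NOT DDS's real cache): results are
// kept only while successive queries share the same deal and trump suit.
class SessionCache {
  constructor() {
    this.key = null;          // "deal|trump" of the current session
    this.entries = new Map(); // position -> cached result
    this.hits = 0;
    this.misses = 0;
  }

  // Returns the cached result for `position`, computing it with `solve` on a
  // miss. Switching to a different deal or trump discards everything cached.
  lookup(deal, trump, position, solve) {
    const key = `${deal}|${trump}`;
    if (key !== this.key) {
      this.key = key;
      this.entries.clear();
    }
    if (this.entries.has(position)) {
      this.hits += 1;
      return this.entries.get(position);
    }
    this.misses += 1;
    const result = solve(position);
    this.entries.set(position, result);
    return result;
  }
}
```

In this model, one user stepping through a single deal mostly hits the cache. If several users' queries about different deals are interleaved through one shared instance, every switch clears the cache and nearly every query becomes a miss.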

This means that if, somehow, each client had its own private instance of DDS, its queries would be much more efficient, since typical use is to make lots of queries about the same deal consecutively. By contrast, if one centralised server processes requests for lots of users, it’s much harder to reuse cached data efficiently without significant extra engineering.

All this points towards one thing: what we really want to do is run the DDS library locally, on the client’s machine, separately for each user. A few years ago this would have been a pipe dream, but more modern technologies make it pretty straightforward to get the best of both worlds – faster and more efficient for the user, and simpler and easier for the service provider!

Compiling with Emscripten

If we suppose our use case is a webpage or web app, this means we want to run DDS directly in the client’s browser. To do that, we can’t simply compile the C++ code to a native binary, because the binary depends on the OS and hardware it’s compiled on (or for). We need something else: something platform-independent that browsers understand. Fortunately, there are standards and software out there that can help us!

Emscripten is a tool for turning C or C++ into JavaScript. More specifically, it’s a compiler toolchain that uses LLVM to compile C/C++ to WebAssembly.

Emscripten itself has been around for a relatively long time – the project started in 2010 – and was a big reason for the standardisation process that created WebAssembly. WebAssembly is now a mature, standardised technology, supported in all major modern browsers.

Once the toolchain is installed, compiling a simple C program with Emscripten is straightforward:

emcc main.c

This will emit two files: a.out.js and a.out.wasm.

The WASM file is our code, compiled to WebAssembly – instructions for a virtual machine that browsers know how to execute efficiently. The JavaScript file contains the “glue code” that allows us to interact with the compiled functionality from JS.

We can run our compiled code as a console application via the Node.js runtime. The Emscripten toolchain bundles Node.js with it, so all we need to do is:

node a.out.js

Without any further instructions, Emscripten simply generates a program that runs the C main() function: the JavaScript glue executes main() and then exits.

This isn’t exactly what we want to achieve – we have library functionality that we want to call on-demand, multiple times. That is, we don’t want the JS module to exit, we want it to stay alive indefinitely. Fortunately, with a bit more work and some handy compiler options we can do this too.

Compiling DDS with a C-function wrapper

We want to call two DDS functions from JavaScript:

- SetResources – function to initialise the system.

- SolveBoardPBN – function to solve a deal or position.

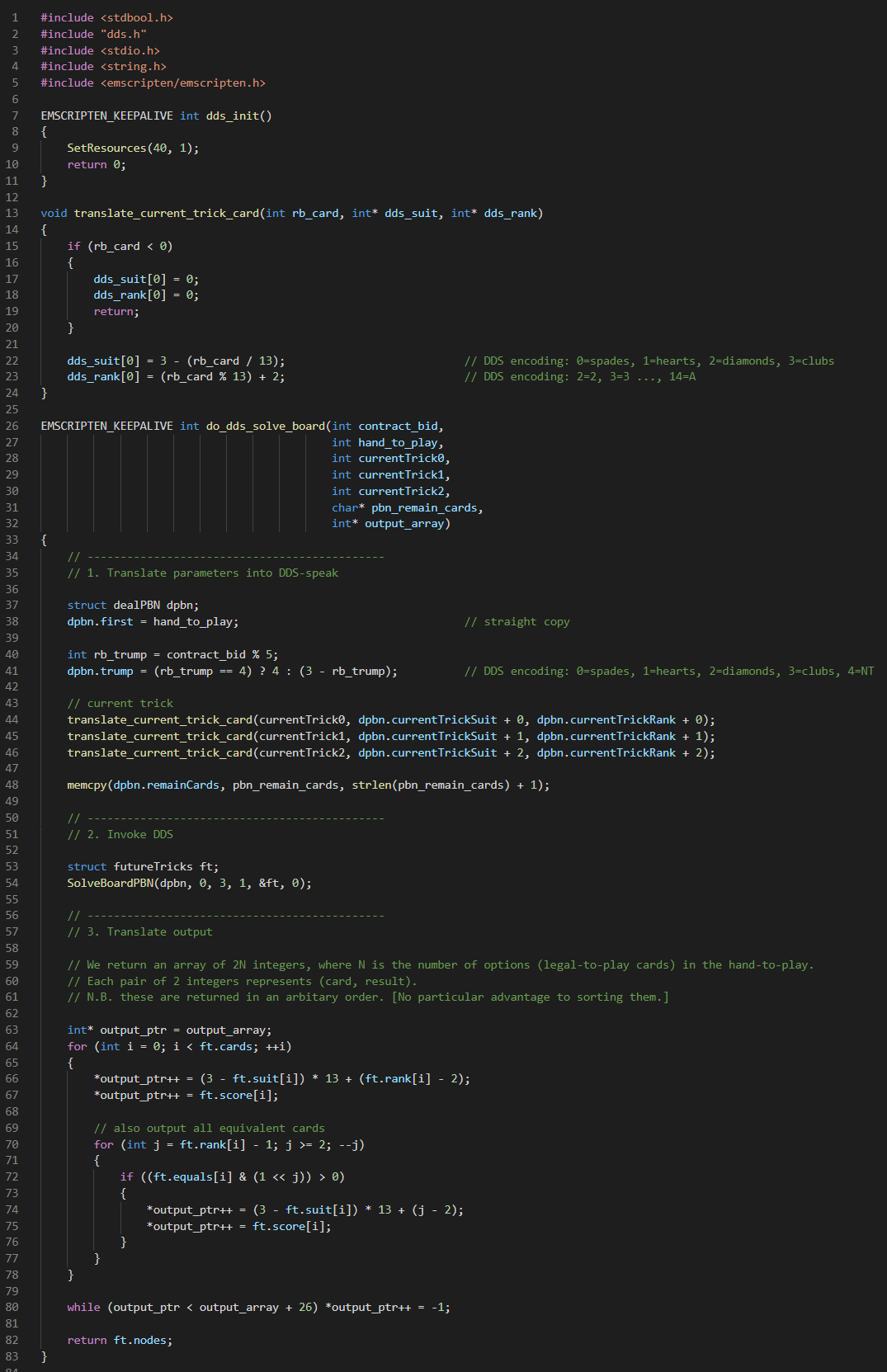

It’s convenient to write a small C-wrapper to translate parameters into the form expected by DDS. Ours looks like this:

Things to note are:

- We decorate the C functions we want to call from JavaScript with the EMSCRIPTEN_KEEPALIVE macro (defined in emscripten.h). This tells the compiler not to strip that function out of the compiled output.

- All of the work in the do_dds_solve_board function is simple translation of the input and output data. We write the output into an array of 26 integers, since this is easy to read and write on the JavaScript side.

- We tell the compiler to generate JavaScript that doesn’t run-and-then-exit. We do this by adding -sNO_EXIT_RUNTIME=1 to the command line.

We can now try to compile DDS!

emcc -sNO_EXIT_RUNTIME=1 <dds-source-files> main.c

We get a few compiler errors, so we need to modify the DDS code in a handful of places to compile it with Emscripten:

- There are some string-related functions in Par.cpp that Emscripten complains about. Fortunately, we don’t need any of the functions in Par.cpp for our purposes, so we can simply leave that file out.

- In dds.h, Emscripten is fussy about the declarations of structs containing other structs, so we need to add the word struct a few times.

To actually run the code successfully, we also need to modify the System::GetHardware function. In normal operation, this detects the number of cores and free memory available in the system. The idea is to use all of the resources in the system when solving multiple deals in parallel.

However, we only want to solve one deal at a time, so we can hard-code the GetHardware function to report a single core and 50MB of memory. This will cause DDS to run in single-threaded mode, with one of its “small” threads.

To get this to work, we also need to tell Emscripten to increase the amount of memory available to be allocated (from the default of 16MB):

emcc -sINITIAL_MEMORY=52428800 -sNO_EXIT_RUNTIME=1 <dds-source-files> main.c
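For reference, the INITIAL_MEMORY value is simply 50 MiB expressed in bytes. WebAssembly memory is allocated in 64 KiB (65,536-byte) pages, so the value also corresponds to a whole number of pages:

```latex
50 \times 1024 \times 1024 = 52\,428\,800\ \text{bytes},
\qquad
\frac{52\,428\,800}{65\,536} = 800\ \text{pages}
```

The 16MB default mentioned above works out the same way: 16,777,216 bytes, or 256 pages.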

Testing the code from JavaScript

We’ve successfully compiled the code, but can’t do anything useful with it yet – first we need to tell Emscripten which functions we’re going to call from JavaScript:

emcc -sINITIAL_MEMORY=52428800 -sNO_EXIT_RUNTIME=1 -sEXPORTED_FUNCTIONS="_free,_malloc,_do_dds_solve_board,_dds_init" -sEXPORTED_RUNTIME_METHODS="getValue,ccall,allocateUTF8" <dds-source-files> main.c

Here, EXPORTED_FUNCTIONS lists the C functions we want to call. Note that each of them gets a leading underscore character when exported. EXPORTED_RUNTIME_METHODS, on the other hand, lists JavaScript helper functions that emcc generates in the a.out.js file. More specifically, we use getValue to read the 32-bit integers in the output array, and we use allocateUTF8 to convert a JavaScript string containing the deal information into a C-style string for input to SolveBoardPBN.
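The marshalling just described can be sketched as follows. The post does not show the C signature of do_dds_solve_board, so the one assumed here is hypothetical: trump, first player, a pointer to the PBN string, and a pointer to a 26-int output buffer. The Emscripten calls themselves (allocateUTF8, _malloc, getValue, _free) are the ones exported on the command line above.

```javascript
// Sketch of calling the wrapper from JavaScript. ASSUMED (hypothetical) C signature:
//   void do_dds_solve_board(int trump, int first, const char *pbn, int *out26);
const RESULT_INTS = 26;

function solveBoard(Module, pbn, trump, first) {
  const pbnPtr = Module.allocateUTF8(pbn);        // malloc'd C string on the wasm heap
  const outPtr = Module._malloc(RESULT_INTS * 4); // room for 26 x 32-bit ints
  try {
    Module._do_dds_solve_board(trump, first, pbnPtr, outPtr);
    const out = [];
    for (let i = 0; i < RESULT_INTS; i++) {
      // Each int is 4 bytes; "i32" reads a signed 32-bit integer.
      out.push(Module.getValue(outPtr + 4 * i, "i32"));
    }
    return out;
  } finally {
    // Both buffers came from malloc, so release them with free.
    Module._free(pbnPtr);
    Module._free(outPtr);
  }
}
```

The try/finally ensures both heap buffers are freed even if the call throws. With -sMODULARIZE=1, the Module object would instead come from calling the factory that a.out.js exports, which in recent Emscripten versions returns a Promise that resolves to the module.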

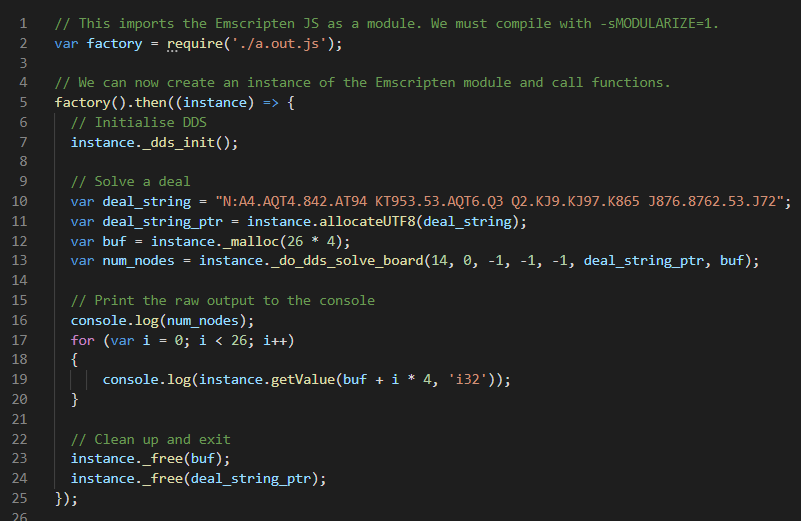

With all of that done, we can write a simple test case to make sure it works:

To import the Emscripten JavaScript as a module in this test, we need to compile it with -sMODULARIZE=1. This is convenient for a little test like this one, which we can run via Node.js on the command line.

The test does a single double-dummy solve of the given deal, returning 26 integers. Each pair of integers is of the form (card, result): the eventual double-dummy result of playing each possible card.
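On the JavaScript side, the 26 integers can be regrouped into (card, result) pairs. The post doesn't describe the card encoding or how unused slots are filled, so this sketch makes three hypothetical assumptions. It treats card codes as opaque integers. It assumes an unused slot carries a negative card code. It also assumes a higher result is better for the player to move.

```javascript
// Regroup the flat 26-int output into { card, result } pairs.
// ASSUMPTION: unused slots (fewer than 13 legal cards) have a negative card code.
function decodeResults(flat) {
  const pairs = [];
  for (let i = 0; i + 1 < flat.length; i += 2) {
    const card = flat[i];
    const result = flat[i + 1];
    if (card < 0) continue; // assumed marker for an unused slot
    pairs.push({ card, result });
  }
  return pairs;
}

// Cards that achieve the best result (ASSUMPTION: higher is better for the mover).
function bestCards(pairs) {
  const top = Math.max(...pairs.map((p) => p.result));
  return pairs.filter((p) => p.result === top).map((p) => p.card);
}
```

Returning every card that ties for the best result, rather than a single one, matches how a play recap would typically highlight all double-dummy-correct choices.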

Running DDS in a WebWorker

A more useful scenario is to run DDS inside a webpage. Although it’s fast, a double-dummy solve isn’t instant, so it’s best to do the work on a separate thread – that way we don’t cause any hitches on the main (UI) thread.

Happily, using a WebWorker makes this very simple. Here we have to compile without -sMODULARIZE=1, but then we can call this handy function at the top of our WebWorker:

importScripts("./a.out.js");

(The importScripts function is part of the WebWorker API.)

This defines a variable Module, and we can subsequently call our functions through it, eg:

Module._dds_init()
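One caveat is worth hedging. By default the WebAssembly is compiled asynchronously, so the exported functions may not be callable the instant importScripts returns. Emscripten calls Module.onRuntimeInitialized once the runtime is ready, and defining Module before importScripts lets the generated glue pick that callback up. The sketch below queues requests until then. The handler, the { id, result } / { id, error } message shape and the solve body are our own hypothetical choices.

```javascript
// Worker-side request handler (sketch). Requests that arrive before the
// Emscripten runtime is ready are queued, then processed once ready() runs.
function createWorkerHandler(init, solve, reply) {
  const queue = [];
  let isReady = false;

  function handle(request) {
    try {
      reply({ id: request.id, result: solve(request) });
    } catch (err) {
      reply({ id: request.id, error: String(err) });
    }
  }

  return {
    // Call once, from Module.onRuntimeInitialized.
    ready() {
      init();
      isReady = true;
      while (queue.length > 0) handle(queue.shift());
    },
    // Call for every incoming message.
    receive(request) {
      if (isReady) handle(request);
      else queue.push(request);
    },
  };
}

// Wiring inside the worker script (sketch; the solve body is hypothetical):
//   const handler = createWorkerHandler(
//     () => Module._dds_init(),
//     (req) => { /* copy req into wasm memory, call Module._do_dds_solve_board, read results */ },
//     (msg) => self.postMessage(msg));
//   var Module = { onRuntimeInitialized: () => handler.ready() };
//   importScripts("./a.out.js");
//   self.onmessage = (event) => handler.receive(event.data);
```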

Of course, the exact details of the WebWorker will depend on the app or webpage it’s communicating with.
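As one possible shape for the page side, this sketch wraps a Worker so that each request returns a Promise, matching replies to requests by an id. It assumes the worker answers each request with { id, result } or { id, error }. That protocol, the function name and the worker filename in the usage note are hypothetical.

```javascript
// Main-thread client (sketch): one Promise per request, matched by id.
// ASSUMES the worker replies with { id, result } or { id, error }.
function createDdsClient(worker) {
  let nextId = 1;
  const pending = new Map(); // id -> { resolve, reject }

  worker.onmessage = (event) => {
    const { id, result, error } = event.data;
    const entry = pending.get(id);
    if (!entry) return; // unknown or already-settled request
    pending.delete(id);
    if (error !== undefined) entry.reject(new Error(error));
    else entry.resolve(result);
  };

  return {
    solve(request) {
      const id = nextId++;
      return new Promise((resolve, reject) => {
        pending.set(id, { resolve, reject });
        worker.postMessage({ ...request, id });
      });
    },
  };
}
```

In a page this might be used as `const dds = createDdsClient(new Worker("dds-worker.js"))`, followed by `await dds.solve({ ... })`. Because replies are matched by id rather than arrival order, the page can fire off several queries without tracking which answer belongs to which.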

Conclusion

Incorporating library functionality into web-based software used to require wrapping it as a web service and providing servers to run it – but with modern compilation tools and browser capabilities, we can now run more types of software directly in the browser. This can make developing fully-featured web experiences both quicker and simpler.
